Note

This technote is not yet published.

Significant changes took place in IT during 2019. Here we address the team structure and responsibilities. We put forward some planning and start on an estimate to complete for IT.

1 Introduction

In this document we lay out the IT approach to the Rubin Observatory construction. It refers to other, more specific tech notes for details.

We wish to build a modern approach to IT that is just close enough to the cutting edge to allow us to remain current at the start of operations, but preferably not quite at the bleeding edge. To best support deployment we must have an element of research and development in the IT team.

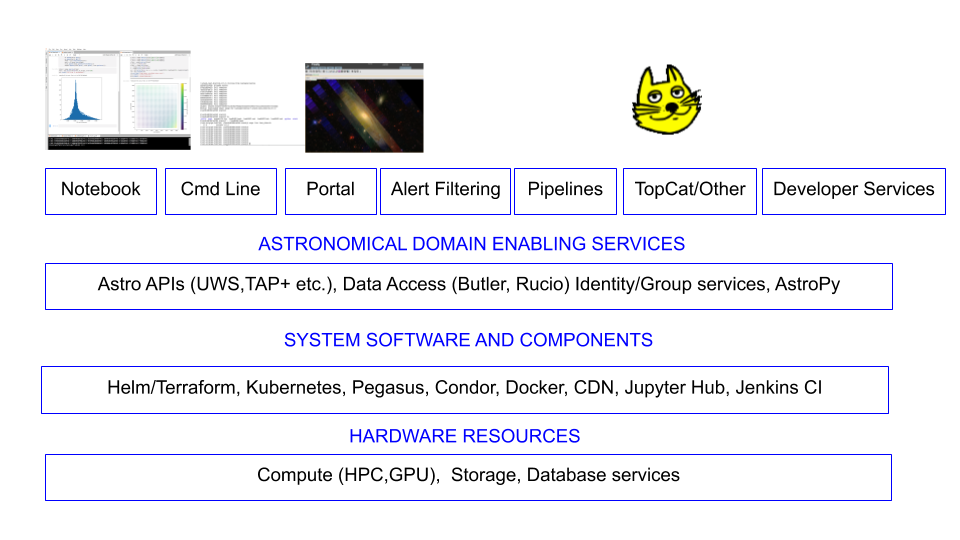

The general Cyber Infrastructure approach of Rubin Observatory is layered as in the diagram Cyber Infrastructure from [1]

Vera Rubin Observatory Cyber Infrastructure stack.

2 Organization

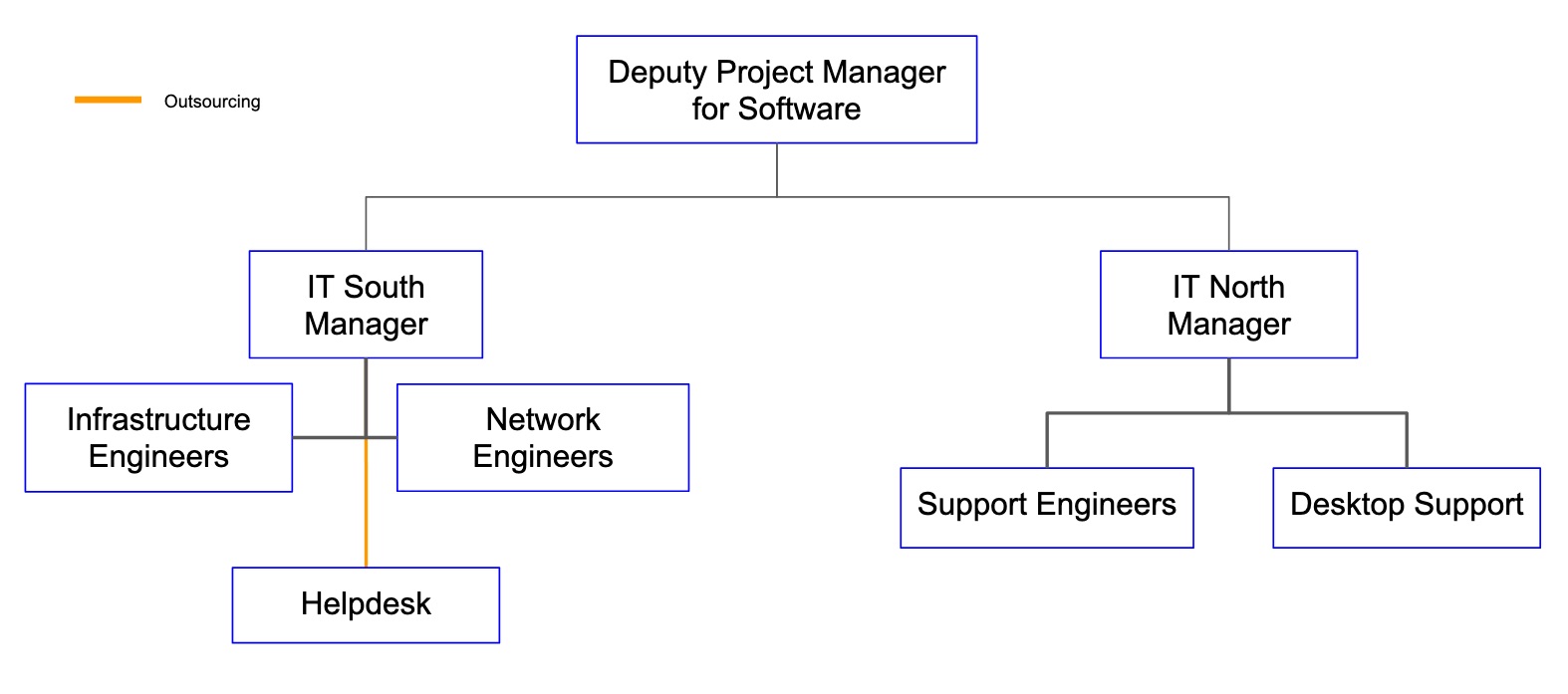

The IT group in Rubin Observatory has the following organization:

IT North and South share a single Jira system where tickets can be tracked.

The IT managers North and South tag up on Monday with the deputy project manager for software to discuss issues concerning IT in general.

The IT manager South, DF Manager, T&S Software Manager, and DM SQuaRE manager tag up with the deputy project manager for software to discuss cross-cutting issues and priorities.

2.1 IT North and South and interactions

IT North and South meet every week on Monday to discuss current and future work of the network.

2.2 IT South

The IT South group is divided into three main areas: Infrastructure, Networking, and Helpdesk.

Infrastructure and Networking provide support and development to the production environment of Rubin Observatory.

Helpdesk support is provided by a contractor, covering both sites (base and summit).

To organize work, there are several scheduled meetings covering all the aspects of the IT operations in the South.

- Daily group standup: Every day, at the beginning of the day, each team member reports in Slack the work planned for the day.

- Weekly DevOps standup: Videoconference or in-person meeting held 3 days per week to discuss infrastructure-related work.

- Weekly Network standup: Videoconference or in-person meeting held 3 days per week to discuss network-related work.

- Weekly IT group meeting: Videoconference or in-person meeting to discuss all work of the week and upcoming work.

- Monthly tickets clean: Videoconference or in-person meeting to review and close Jira tickets.

2.3 IT North

The IT North group (aka the Tucson group) has three primary roles: delivering PMO-provided services, Helpdesk, and meeting support for Tucson on-site and particular off-site meetings.

PMO-provided services include Atlassian Suite, Docushare, Teamwork Cloud & Magic Draw, and PDM/Solidworks.

Helpdesk support covers all users of the various web-based services, as well as particular hardware support for end users and specialized groups.

Meeting support consists of assisting meeting owners with AV and technical needs inside the Tucson building, acting as general contractor and technical contact for PMO-sponsored meetings or reviews, or serving as technical advisor for meetings sponsored by other institutions.

In steady state, our group uses the rubinobs-it-tucson email, Slack, and Jira’s comments and work log to keep informed. Our group is physically located in a single office and can meet regularly when necessary. We also meet for one-on-ones related to training or particular questions or issues.

3 Physical Locations

3.1 Base Data Center

The Rubin Observatory Base Datacenter is located in La Serena, Chile, in a location shared with other AURA programs. However, Rubin Observatory maintains full independence in the administration and design of its cabinets. The logical design of the cabinets is based on top-of-rack switching, meaning that devices connect to a switch located at the top of each rack, facing the back to facilitate cabling. To avoid excess slack, network cables are adjusted to the required length, and all of them are re-certified for Cat6 after adjusting. The building sits on a seismic base isolation system and features:

- Keyless access control

- 39 redundant circuits connected to two UPS

- 11 simple circuits connected to a single UPS

- Climate control system with a power of 235 kW

- Two UPS of 400 kVA each

Network infrastructure deployment can be reviewed in the following links:

3.2 Summit Data Center

The Summit Data Center is located on Cerro Pachon, Chile. The racks are designed with top-of-rack switching, and network cables are adjusted to the necessary length and re-certified. The Data Center has the following features:

- Keyless access

- 20 non-redundant circuits

- 1 UPS of 750 kVA

- Generator of 800 kVA, with a capacity of 1000 liters plus 32000 liters in an external pump

Network infrastructure deployment can be reviewed in the following links:

3.3 Tucson Lab(s)

Tucson lab(s) should resemble Chile as much as possible in terms of network and deployment.

A Kubernetes cluster is deployed as a test stand, using the same deployment as in Chile. This allows users to test tools under conditions similar to those found in the clusters deployed in Chile.

3.4 Tucson Data Center

The Tucson Data Center is maintained by NOIR Lab (formerly NOAO) and is located in Tucson, AZ in the main building. RubinObs PMO services reside within two racks. PMO also has an empty rack, and there are racks for the Tucson Lab(s) described in Section 3.3.

Power, networking, and physical security are provided by NOIR Lab.

4 Planning

This technote serves as a roadmap for Rubin IT activities. Several projects will be initiated to accomplish what has been laid out in the technote.

The planning of the projects is tracked in two Jira projects:

- IT Plan: Main Jira project to follow the progress of IT projects spawned from this technote. Primavera pulls updates from this project.

- IT: This Jira project mixes Epics that are created from ITP and Epics from current operational issues.

Priorities of projects can be viewed here: https://confluence.lsstcorp.org/display/IT/IT+Chile+Priorities

These new projects imply an extra expense in the Rubin IT budget. Details of these expenses can be found in the Financial Forecast report.

5 Areas of work

Rubin IT divides its work into several areas to provide effective solutions for the observatory. The following are the main areas where IT provides support and development.

5.1 Networking

To accommodate the demanding requirements of the computing and storage deployments of Rubin Observatory, IT provides a highly available and scalable network design. The network is logically separated into Campus and Control networks. Both have a backplane of redundant 100 Gb/s links and use top-of-rack (TOR) switching to connect devices.

For details of the network design, please review Network Infrastructure High-Level Design.

Wireless devices are controlled from a central location and connected to an identity management system that authorizes users and routes them to their correct network segment.

For details of the Wireless infrastructure design please review Wi-Fi Infrastructure High-Level Design
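As a rough illustration of the identity-based routing described above, the sketch below maps a user's group membership to a network segment. The group names, VLAN IDs, and the `segment_for` helper are hypothetical and chosen only for illustration; the real mapping lives in the identity management system and the wireless controller.

```python
from dataclasses import dataclass

# Hypothetical mapping from identity-management group to VLAN ID.
# These names and numbers are illustrative, not the observatory's real values.
GROUP_TO_SEGMENT = {
    "rubin-staff": 100,
    "rubin-contractor": 110,
    "guest": 900,
}


@dataclass(frozen=True)
class User:
    name: str
    groups: tuple


def segment_for(user: User, default: int = 900) -> int:
    """Return the VLAN of the first mapped group, or the default segment."""
    for group in user.groups:
        if group in GROUP_TO_SEGMENT:
            return GROUP_TO_SEGMENT[group]
    return default
```

A user with no recognized group falls through to the default (guest) segment, which keeps unknown devices away from internal networks.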

The La Serena building has mostly Rubin Observatory access points, hence there is integration with the NOIRLab networks. Users can log in to the SSID of their program, authenticate against their own backends, and use their own gateways.

For details of the WiFi integration please review Wireless Integration with NOIRLabs

5.2 Desktop Support

IT provides desktop support to Rubin Observatory staff for all hardware and software requests. Support is provided in a timely and efficient manner. Helpdesk agents are responsible for deploying customized versions of desktop operating systems from a central repository. They are capable of installing additional software without major interruptions to the user.

Helpdesk agents are also responsible for keeping the inventory system up to date, creating documentation, and contributing to the creation of new policies.

The Helpdesk project in Jira allows tickets to be assigned to IT North or South, depending on the requirement.

To review how to file tickets please visit [link to technote]

IT also provides remote support, which was used during the pandemic of 2020. To review details of remote work please visit [link to technote]

For more details about Helpdesk please review [link to tech doc]

5.3 Monitoring

IT provides monitoring metrics for the network, servers, services, and environmental variables of the Rubin Observatory datacenters. Metrics are collected from different sources, such as app collectors and SNMP queries. The visualization of these metrics is managed by an open-source dashboard, which contains sub-dashboards with groups of servers. Access to the sub-dashboards is delegated to groups of users with common monitoring needs. The monitoring platform is deployed on a reliable, maintainable, and repeatable platform to guarantee its operation in a stable state. The platform triggers notifications of outages and exceeded thresholds, which are managed by a central alerting system that forwards them to several of Rubin’s communication channels.

For details of the platform, please review https://ittn-007.lsst.io/
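The threshold-and-forward behavior described above can be sketched as follows. This is a minimal illustration, not the deployed alerting system: the metric names, limits, and channel names are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Threshold:
    metric: str      # name of the collected metric
    limit: float     # alert when the sample exceeds this value
    channels: tuple  # communication channels that receive the alert


def evaluate(samples: Dict[str, float], thresholds: List[Threshold]) -> List[dict]:
    """Return one alert per threshold whose metric sample exceeds its limit."""
    alerts = []
    for t in thresholds:
        value = samples.get(t.metric)
        if value is not None and value > t.limit:
            alerts.append(
                {"metric": t.metric, "value": value, "channels": list(t.channels)}
            )
    return alerts


# Hypothetical thresholds; real limits and channels are defined by the platform.
THRESHOLDS = [
    Threshold("datacenter.temperature_c", 27.0, ("slack", "email")),
    Threshold("switch.port_errors", 100.0, ("slack",)),
]

alerts = evaluate(
    {"datacenter.temperature_c": 29.5, "switch.port_errors": 3.0},
    THRESHOLDS,
)
```

Here only the temperature sample crosses its limit, so a single alert is produced and addressed to both of its channels.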

5.4 Infrastructure

IT manages infrastructure as code when possible. It is operated in a continuous delivery and continuous integration pipeline. Systems are orchestrated from a central platform, allowing a rapidly scalable infrastructure and minimizing human errors by automating deployments. Rubin Observatory software, when possible, is fully integrated into this platform.

The production environment consists of virtual machines and containers running in physical clusters. Testing and development infrastructure has local resources but also leverages cloud computing resources.

The platform utilized to deploy infrastructure includes:

- Infrastructure automation - Puppet

- Orchestration - Kubernetes

- Automated user provisioning - FreeIPA

The objective is to provide highly available systems and rapidly scalable platforms.

The project in general favors open source software but uses licensed software when it is the best option.

For details about the deployment platform please review https://ittn-002.lsst.io/

For details of the Puppet standards please review https://ittn-005.lsst.io/
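The idea behind managing infrastructure as code is that a declared desired state is compared with the actual state, and only the differences are applied. The sketch below illustrates that reconciliation in plain Python. It is not Puppet or Kubernetes code, and the package names and versions are made up for the example.

```python
def plan(desired: dict, actual: dict) -> list:
    """Return the actions needed to move `actual` to `desired`."""
    actions = []
    for name, version in sorted(desired.items()):
        if name not in actual:
            actions.append(("install", name, version))
        elif actual[name] != version:
            actions.append(("upgrade", name, version))
    for name in sorted(set(actual) - set(desired)):
        actions.append(("remove", name, actual[name]))
    return actions


def apply(actions: list, actual: dict) -> dict:
    """Apply the planned actions to a copy of `actual` and return the new state."""
    state = dict(actual)
    for op, name, version in actions:
        if op == "remove":
            state.pop(name)
        else:
            state[name] = version
    return state


# Hypothetical package sets, used only to exercise the functions.
desired = {"nginx": "1.24", "chrony": "4.3"}
actual = {"nginx": "1.22", "telnet": "0.17"}
converged = apply(plan(desired, actual), actual)
```

Running `plan` again on the converged state yields no actions, which is the idempotence property that makes automated deployments repeatable.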

5.5 Authentication and Authorization

Rubin Observatory maintains two types of accounts: organization accounts (typically email) and production environment accounts. The user is the same, but the systems managing the accounts are separate. IT provides 2FA and sudo escalation upon approval. Accounts are administered in a centralized platform, and servers or services register with this platform after deployment. To streamline access to resources and to promote self-service, the granting of access is delegated to the person responsible for, or point of contact of, each server or service. IT is always able to provide access, but the approval must always come from the person responsible for the server or service.

For details of the accounting implementation please review https://ittn-010.lsst.io/
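The delegation rule described above (IT can perform the grant, but only the service's responsible person can approve it) can be sketched as a simple check. The `Service` class and `grant_access` function are hypothetical and only illustrate the rule; they are not part of the actual account platform.

```python
from dataclasses import dataclass, field


@dataclass
class Service:
    name: str
    owner: str  # responsible person or point of contact for the service
    members: set = field(default_factory=set)


def grant_access(service: Service, user: str, approved_by: str) -> None:
    """Add `user` to the service only if the approval comes from its owner."""
    if approved_by != service.owner:
        raise PermissionError(
            f"{approved_by} cannot approve access to {service.name}"
        )
    service.members.add(user)
```

For example, a request approved by the owner succeeds, while one approved by anyone else, including an IT administrator, raises `PermissionError` and leaves the membership unchanged.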

5.6 Storage

Rubin IT has designed a storage platform with the following features:

- Highly available

- High performance

- Scalable

Storage has been designed in several tiers to provide different levels of service.

5.7 Cybersecurity

Rubin IT secures and protects the technological resources of the Rubin Observatory, using industry standards, best practices, and cybersecurity policies. The focus of IT efforts is to preserve the confidentiality and integrity of information, and also to increase the cybersecurity awareness of users by providing training and policies.

IT enforces policies and keeps systems up to date to strengthen the security of data and systems.

5.8 Backup

IT provides data and disaster recovery services to the production environment of Rubin Observatory. The backup platform is capable of recovering historical configuration data from up to 6 months back. Scientific data is not included in this backup platform.

Historical data is stored in virtual tape libraries. There is also a smaller staging area that holds backups for a few days before they are sent to historical archiving. The staging area allows the quick recovery of files lost to bugs, system crashes, or human errors.

For details of the backup platform please review [link to technote]
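The two-tier retention described above can be expressed as a small classification by backup age. The text only says "a few days" for staging and "up to 6 months" for the archive, so the exact values below (7 and 183 days) are assumptions chosen for illustration.

```python
from datetime import date, timedelta

STAGING_DAYS = 7    # assumption: stands in for "a few days" in the staging area
ARCHIVE_DAYS = 183  # assumption: roughly 6 months of historical retention


def tier_for(backup_date: date, today: date) -> str:
    """Classify a backup as 'staging', 'archive', or 'expired' by its age."""
    age = (today - backup_date).days
    if age < 0:
        raise ValueError("backup date is in the future")
    if age <= STAGING_DAYS:
        return "staging"
    if age <= ARCHIVE_DAYS:
        return "archive"
    return "expired"
```

Under these assumed limits, a backup taken two days ago is still in staging and can be restored quickly, while one taken a month ago must come from the historical archive.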

5.9 Video Conference

Rubin IT offers video conference support in Rubin’s facilities. The system is currently independent from the NoirLab platform, but will integrate with it as Rubin enters operations.

At the moment, the video conferencing system is based on Blue Jeans.

5.10 Long Haul Network

The LHN is a dedicated network to transport science data to the USDF. For details, please review Rubin Observatory Network Design.

Rubin IT provides support to execute tests during the construction phase of the Observatory, and also collaborates with other members of the NET-LHN team to troubleshoot problems detected in the network path to the USDF.

6 Site Reliability Engineering

Rubin IT adopts the Site Reliability Engineering methodology of work. Operations is approached as a software problem, and engineers in the IT group use the following principles to operate:

- Service Level Objectives: Services are managed by objectives, which are defined by all the stakeholders of such service. IT engineers follow these SLOs and act when these are not met.

- Automation: Repetitive tasks are automated. There are still tasks that won’t be possible to automate but the objective of the IT team is to automate as much as possible.

- Share ownership with developers: There are no rigid boundaries between IT engineers and software developers. IT is more focused on production problems than on the logic of the software.

Details of the SRE implementation can be reviewed here [link to technote].
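To make the Service Level Objective principle concrete, the sketch below converts an availability SLO into an error budget, that is, the downtime a service may accumulate before engineers need to act. The 99.9% target and 30-day window are assumptions for the example, not values agreed for any Rubin service.

```python
def error_budget_minutes(slo: float, window_days: int = 30) -> float:
    """Minutes of allowed unavailability for an availability SLO over a window."""
    if not 0.0 < slo <= 1.0:
        raise ValueError("slo must be a fraction in (0, 1]")
    return (1.0 - slo) * window_days * 24 * 60


def budget_remaining(slo: float, downtime_minutes: float, window_days: int = 30) -> float:
    """Budget left after the observed downtime; negative means the SLO is missed."""
    return error_budget_minutes(slo, window_days) - downtime_minutes
```

With these assumed values, a 99.9% SLO over 30 days allows about 43.2 minutes of downtime, so 10 minutes of outage leaves roughly 33.2 minutes of budget.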

7 Documentation

Rubin IT provides procedures, policies, and guides for the Rubin Observatory network. The content is distributed in the following locations:

- Docushare: Official Rubin Observatory documentation repository

- Confluence: General knowledge base documents of services provided by IT

- Technotes: Technical documents with details of technologies used in IT

8 Interactions

IT has the following interactions:

- DM: Weekly tag up with DM manager, SQuaRE, and T&S

- Summit Crew: Twice-weekly meeting with managers of different groups

- NoirLab: Monthly meeting with ITOPS manager

- Site Maintenance and Management: Weekly meeting with several managers

9 Open Issues

- BroTaps - to OIR Lab and move to outside network (currently on internal network)

- Chilean DAC and possible Amazon link (Chilean Data Observatory)

10 Acronyms

| Acronym | Description |
|---|---|
| 2FA | Two-factor authentication |
| AURA | Association of Universities for Research in Astronomy |
| CI | Continuous Integration |
| DAC | Data Access Center |
| DF | Data Facility |
| DM | Data Management |
| DOI | Digital Object Identifier |
| IT | Information Technology |
| ITIL | Information Technology Infrastructure Library |
| ITTN | IT Tech Note (Document Handle) |
| LHN | Long Haul Network |
| LSE | LSST Systems Engineering (Document Handle) |
| LSST | Legacy Survey of Space and Time (formerly Large Synoptic Survey Telescope) |
| NoirLab | National Optical-Infrared Astronomy Research Laboratory |
| OIR | Optical Infrared |
| SQuaRE | Science Quality and Reliability Engineering |
| SRE | Site Reliability Engineering |
| T&S | Telescope and Site |
| TOR | Top Of the Rack |
| UPS | Uninterruptible power supply |
| URL | Universal Resource Locator |
| USDF | US Data Facilities |

References

[1] William O’Mullane, Niall Gaffney, Frossie Economou, Arfon M. Smith, J. Ross Thomson, and Tim Jenness. The demise of the filesystem and multi level service architecture. arXiv e-prints, arXiv:1907.13060, Jul 2019.